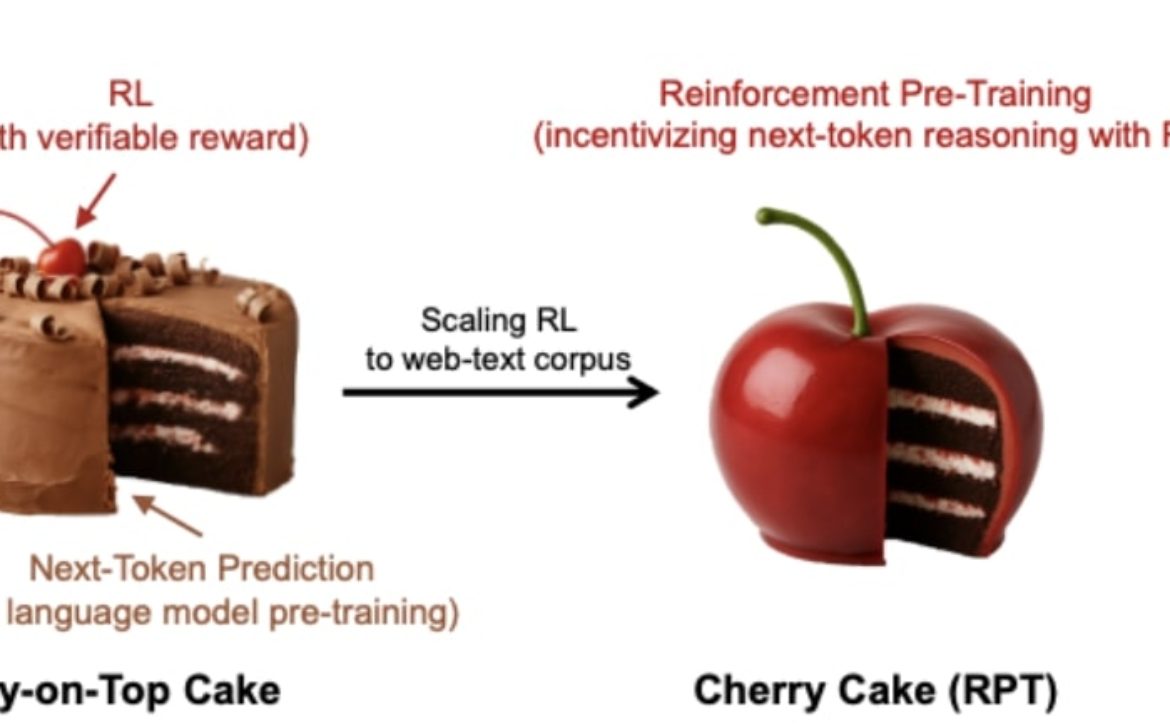

Microsoft and China AI Research Possible Reinforcement Pre-Training Breakthrough

Reinforcement Pre-Training (RPT) is a new method for training large language models (LLMs) by reframing the standard task of predicting the next token in a sequence as a reasoning problem solved using reinforcement learning (RL). Unlike traditional RL methods for LLMs that need expensive human data or limited annotated data…

